Open-source distributed OLAP database and powerful SaaS counterpart shine with plug-in indexes, signature tunable star-tree index, and ability to combine batch data with streaming data.

I started using APL for data analysis around 1972. The powerful language, based on a 1962 book by Ken Iverson, A Programming Language, was and is notable for three things: its use of Greek letters and mathematical symbols, requiring a special APL keyboard; its compact, nearly “write-only” notation; and its multidimensional arrays. The latter provided the foundation for online analytical processing (OLAP) databases. Iverson received a Turing Award for his work on APL in 1979.

Early (1990s) OLAP databases relied on creating and storing pre-computed multidimensional arrays of aggregated values, called OLAP cubes. These allowed for much faster analytical SQL queries than could be accomplished using the raw data. Using OLAP queries, analysts could provide C-level managers with daily reports, typically line printed at the computer center on green-and-white fanfold paper.

One problem with using OLAP for reports is that a one-day turnaround for reports is too long. Another is that OLAP cubes are expensive to compute, so that analysts typically recomputed them weekly, often on weekends when the mainframe wasn’t heavily utilized for routine business activities. That meant that reports could easily be based on week-old data, leading to a data freshness issue. Another issue with OLAP cubes is the increased storage needed for aggregated data.

Cube-based OLAP databases slowly lost customers as their drawbacks became apparent. By 2005, companies were adopting big data solutions for data analysis, including data lakes, Hadoop, and distributed query processing. Switching from OLAP cubes to distributed query processing resulted in improved flexibility, at a cost in query response times.

Another way to save time on complex analysis queries is to use materialized views. Essentially, a view is a virtual table built on-the-fly from a SQL query. A materialized view is a physical table built from a SQL query. Materialized views typically need to be refreshed periodically to keep them up-to-date. An indexed view, introduced in SQL Server 2000, is automatically refreshed when the underlying data changes. Materialized views trade disk space for time.

You can gain many of the benefits of OLAP cubes at lower cost by doing targeted partial aggregations. The classic example for an airline flight-time database is to save aggregations for high-volume routes, such as New York to Los Angeles, but compute them on the fly for low-volume routes, such as Fairbanks to Nome.

Getting to real-time analysis

These analytic techniques are all aimed at supporting small numbers of managers with overnight reports. What about providing real-time analytic answers to large numbers of customers? That was the issue at LinkedIn that spurred the development of Apache Pinot.

Three metrics needed to change drastically: latency, freshness, and concurrency. Latency for a report query can be 100 seconds; for a real-time query, it should be more like a hundredth of a second. People got away with week-old data in the 1990s, but these days data freshness for a report should be 100 seconds, and for a real-time query should be about 10 seconds. The number of concurrent users back in the day was a single digit; these days, there might be 100 concurrent internal users for a report generation app, and potentially a million simultaneous requests for a customer-facing app.

At LinkedIn, Apache Pinot is used for Who Viewed Your Profile, Talent Analytics, Company Analytics, and several other user-facing and internal functions. At Uber Eats, Pinot is used for user ordering queries, such as “What’s the soonest I can get a hamburger delivered?”, and for internal dashboards showing demand-supply, anomalous events (like delayed orders), and live orders.

If you’d like to see a video about this stuff, this one by Tim Berglund is a good place to start.

What Apache Pinot does

Apache Pinot is an open-source, distributed database for customer-facing, real-time analytics, ingesting data from various sources and executing queries using SQL. It is implemented in Java. Pinot’s SQL support is limited to DML (Data Manipulation Language). Pinot does data definition using JSON.

Apache Pinot can scale to thousands of nodes that respond to a query request in unison. It can ingest streams of millions of events per second, and it can make that data available immediately for queries. It can respond with low (< 100ms) latency without caching.

Apache Pinot supports user-facing analytics with the capability to process hundreds of thousands of simultaneous queries. It automatically replicates and distributes data among nodes for fault tolerance. Apache Pinot supports many kinds of indexes, including its signature tunable star-tree index. It stores data in a columnar format for fast analytic queries.

How Apache Pinot works

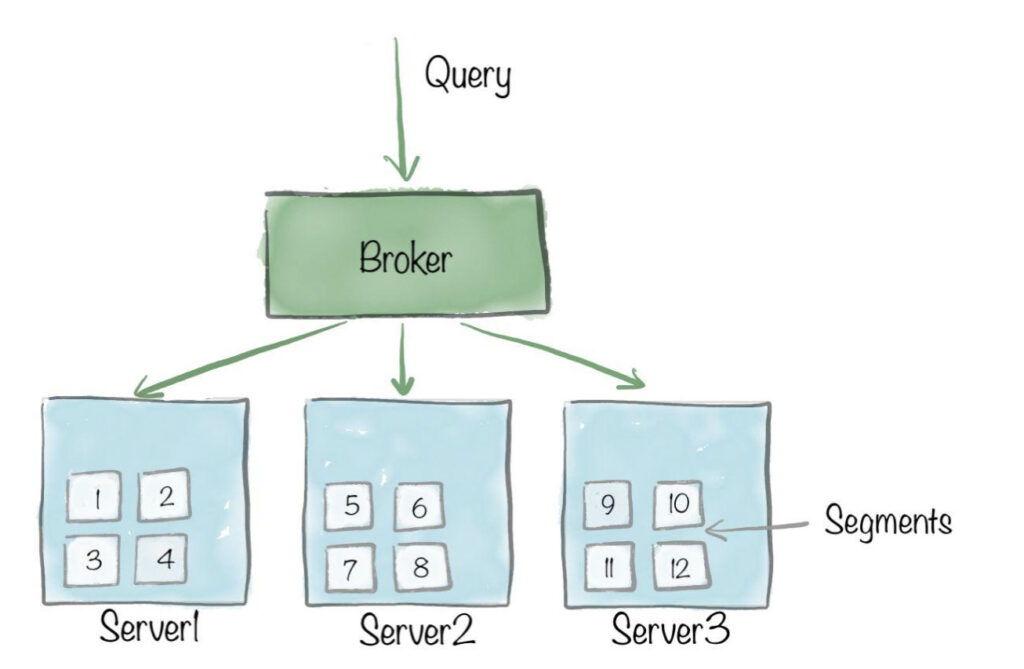

Essentially, Apache Pinot works by scattering SQL queries from a broker node out to server nodes, which store and process segments of a Pinot table. The results from the distributed servers come back to the broker, which merges them and sends that unified result back to the client.

Apache Pinot components:

- Pinot table: A logical abstraction that represents a collection of related data, that is composed of columns and rows (known as documents in Pinot).

- Pinot segments: Similar to a shard/partition, data for a Pinot table is broken into multiple small chunks that pack data in a columnar fashion along with dictionaries and indexes for the columns.

- Pinot servers: A node that is responsible for a set of Pinot segments, by storing them locally and processing them at query time.

- Pinot brokers: A node that receives user queries, scatters them to Pinot servers, and finally, merges and sends back the results gathered from Pinot servers.

StarTree

To speed up the query process and reduce the number of documents scanned, Apache Pinot applies optimizations at each level. At the broker, it does data partitioning, replica group segment assignment, and partition-aware query routing. At the servers, it applies metadata-based pruning or bloom filters. At the segments, it applies indexes for queries with filter predicates. It finally applies aggregation optimizations.

Apache Pinot indexes

All of these optimizations help, but it’s the indexes that make the most dramatic difference. Apache Pinot supports at least seven kinds of index, ranging from the common inverted index, sorted index, and range index, to the specialized star-tree index and JSON index.

Inverted index

An inverted index maintains a map of each column value to its location using a bitmap. For an aggregation query with a filter predicate (e.g., select count(*) … where ID=xxx) on a data set with ~3 billion rows without inverted indexing, the query had to do a full scan of 3 billion rows and took over 2.3s, whereas after applying an inverted index the latency dropped to just 12ms.

Sorted index

One column within a Pinot table can be configured to have a sorted index. Internally, it uses run-length encoding to capture the start and end location pointers for a given column value. The query use case and speed-up are similar to an inverted index.

Range index

A range index is a variant of an inverted index that can speed up range queries, i.e., queries with range predicates (e.g. column > value1, column <= value2). For our data set of ~3 billion rows, a range query took over 40s without a range index and 2.9s with one.

JSON index

Apache Pinot enables storing a JSON payload representing arbitrarily nested data as a String column. A JSON index can be applied to such columns to accelerate the value lookup and filtering for the column, a very powerful feature that lets you index all fields within your nested JSON blob. For a JSON query (e.g., select * … where JSON_MATCH(…)) on a 100 million row data set, it took over 17s without a JSON index and 10ms with one.

Text index

Apache Pinot provides the ability to do a regex-based text search or fuzzy text search on String columns using a text index. Internally, this is implemented using an Apache Lucene index. On a data set of ~3 billion rows, a text match query (e.g., select count(*) … where text_match(…)) took over 15s without a text index and 126ms with one.

Geospatial index

For applications like Uber Eats, a geographical query to find, say, restaurants within five miles of a location is a common use case. Apache Pinot implements a geospatial index using Uber’s open-sourced H3 library, a hexagonal hierarchical spatial Index. In one test, a query of the form select * … where st_distance(…) < $distance took 1s without an index and 50ms with a geospatial index.

Star-tree index

Star-tree is a special type of index that serves as a filter as well as an aggregation optimization technique. Star-tree takes in a list of dimensions and aggregation functions. For each unique dimension combination, star-tree pre-computes and stores the aggregate metric values. Star-tree lets you specify exactly which dimensions to pre-compute and how many values to pre-compute, giving you a trade-off between speed and space without having to create materialized views. An aggregation query example using full pre-computation on ~3 billion rows took over 31s without an index and 50ms with a star-tree index.

StarTree Cloud

Apache Pinot was developed at LinkedIn in 2013, and released to open source in 2015. In 2021, the developers founded StarTree, the company, named after the star-tree index, to accelerate the adoption of Apache Pinot. StarTree Cloud is a real-time analytics platform for user-facing applications powered by Apache Pinot and deployed globally on AWS, Google Cloud Platform, and Microsoft Azure. You can run it in your own environment or use the public StarTree Cloud.

StarTree Cloud lets you provision Apache Pinot clusters of different sizes, ingest data from real-time and batch data sources, and run analytics workloads with ultra-low latency. StarTree manages the underlying infrastructure for you, provides insights from a diverse set of data, and helps you make informed business decisions.

StarTree Cloud also includes tools for a better developer experience, including StarTree Data Manager (for data ingestion) and StarTree ThirdEye (for anomaly detection), along with additional key features to enhance Apache Pinot, including tiered storage and additional support for ingesting and managing data.

IDG

StarTree Data Manager

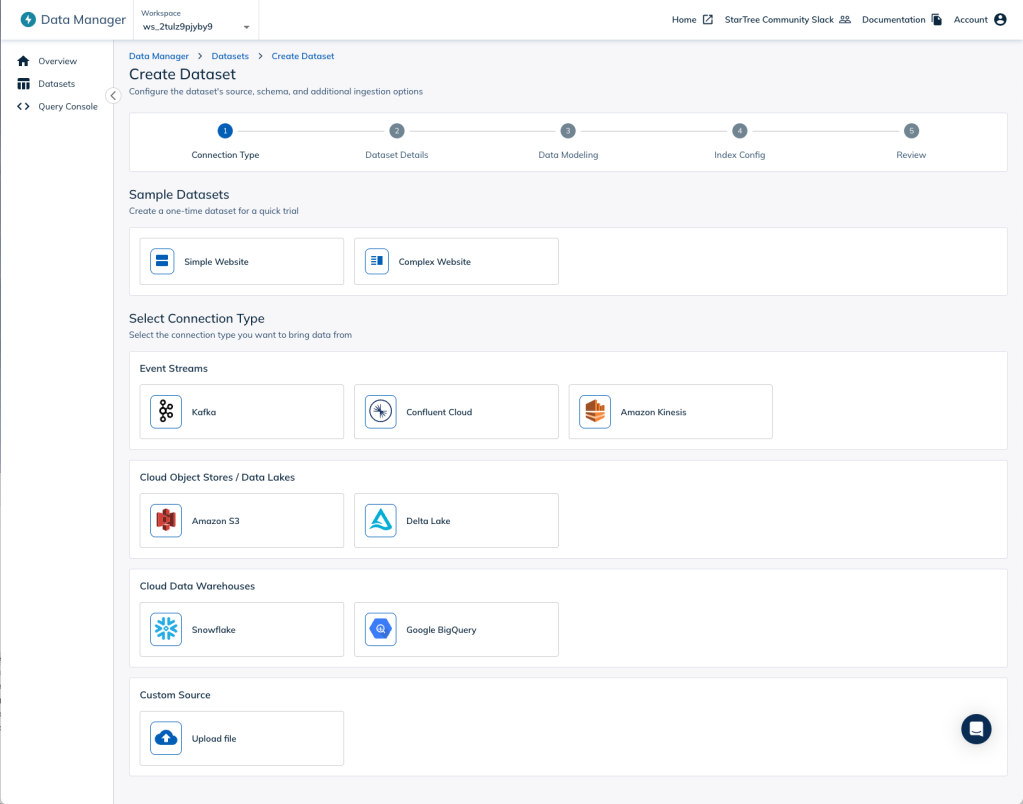

StarTree Data Manager enables easy data ingestion into Pinot tables with a visual, no-code interface, minimizing errors and saving time. It supports eight connectors, including Apache Kafka and Amazon S3, plus a customizable connection.

IDG

Other ingestions

In addition to StarTree Data Manager, StarTree can ingest from Google Cloud Pubsub, from a database that supports a SQL Connector, from files with known formats, from real-time segments, from Debezium-format change data capture, from Delta Lake, from DynamoDB Streams, and from Prometheus-formatted metrics data.

StarTree ThirdEye

StarTree ThirdEye is an anomaly detection, monitoring, and interactive root-cause analysis “all-in-one” platform. It comes with a self-serve UI experience. StarTree ThirdEye is available as a managed platform with StarTree Cloud. It can also be installed from a binary file, from sources, or using Docker.

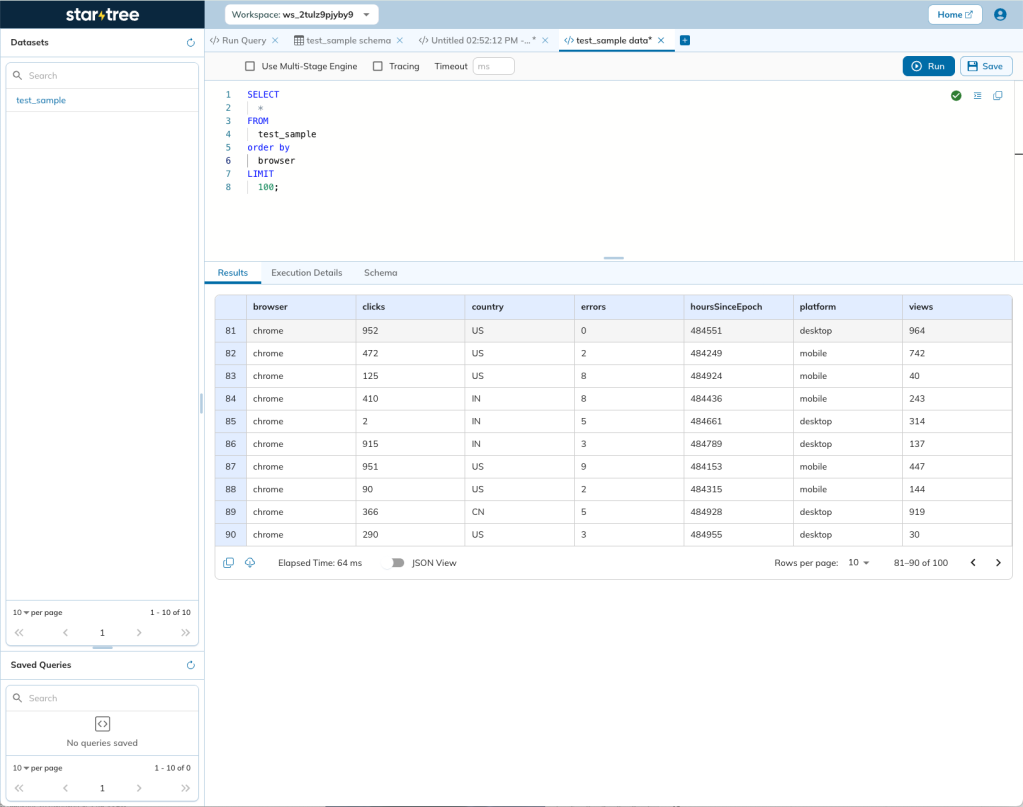

Hands-on with StarTree Cloud

It’s relatively easy, and free, to try out StarTree Cloud, so I did that before attempting to run Apache Pinot locally. Using some sample data supplied by StarTree, I was able to ingest the data with StarTree Data Manager and try out the StarTree Query Console in a matter of minutes.

I wasn’t impressed with the SQL-query-building capabilities of the console, but the query completion times were in the two-digit millisecond range even for analytic queries, which is impressive given that I was using the free tier. Of course, the sample data set only has 600K rows. I didn’t try to test with billions of rows, and I didn’t need to construct any indexes.

You can connect to data sets in the StarTree Cloud using a REST API, a Python client, a native Java client, or a Node.js client. StarTree warns that the Node.js client may not scale well.

You can visualize data hosted in the StarTree Cloud using Superset, Tableau, or Redash. The Redash solution involves a little Python coding.

You can upgrade to a production cloud by contacting StarTree directly, or by signing up on AWS, GCP, or Azure.

IDG

IDG

Hands-on with Apache Pinot

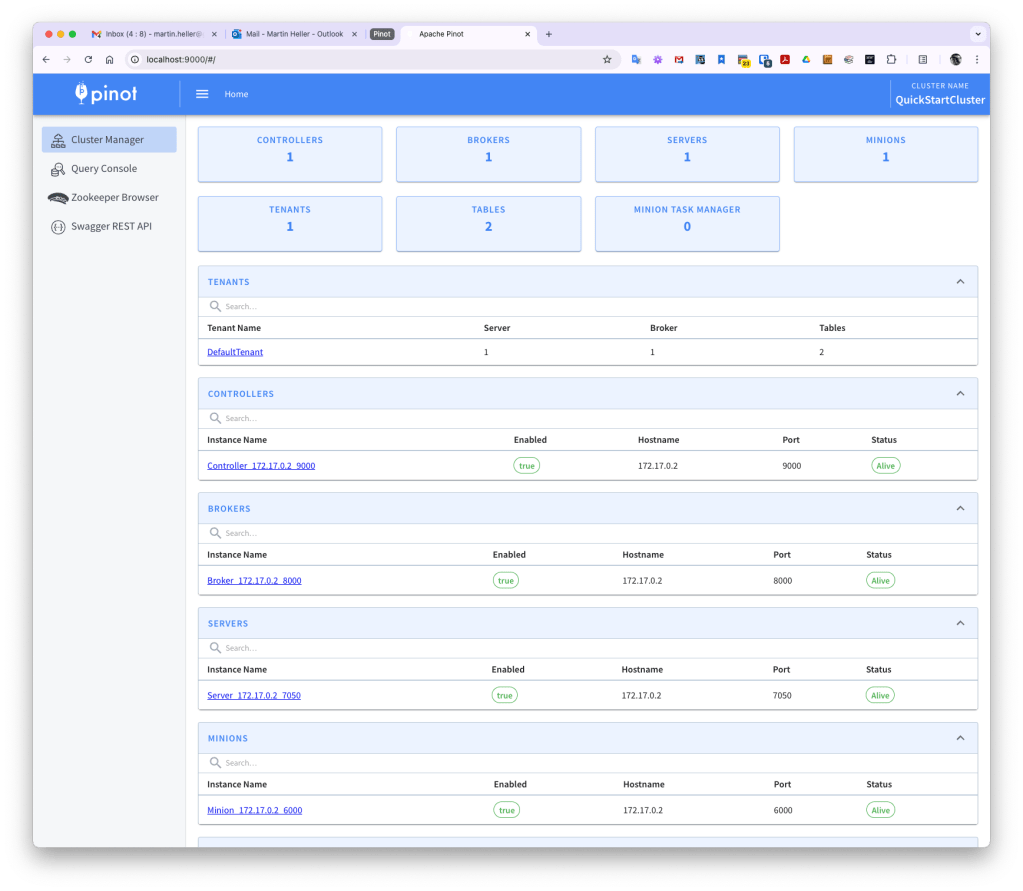

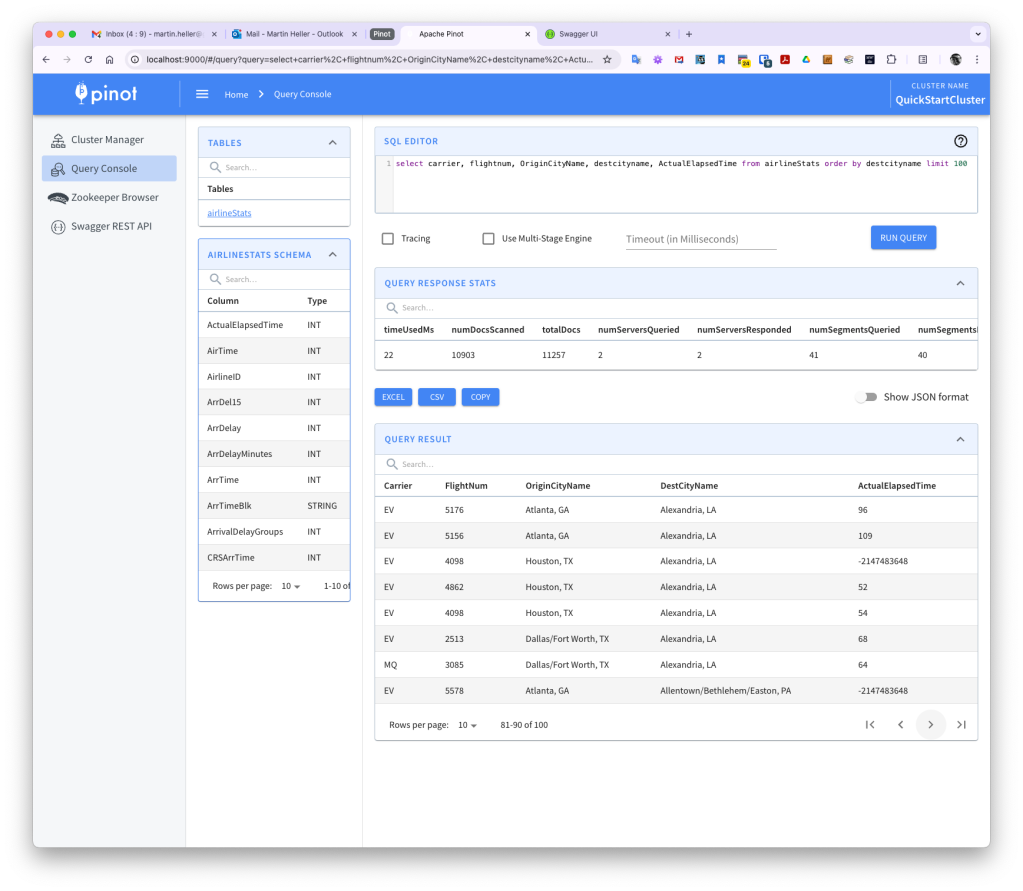

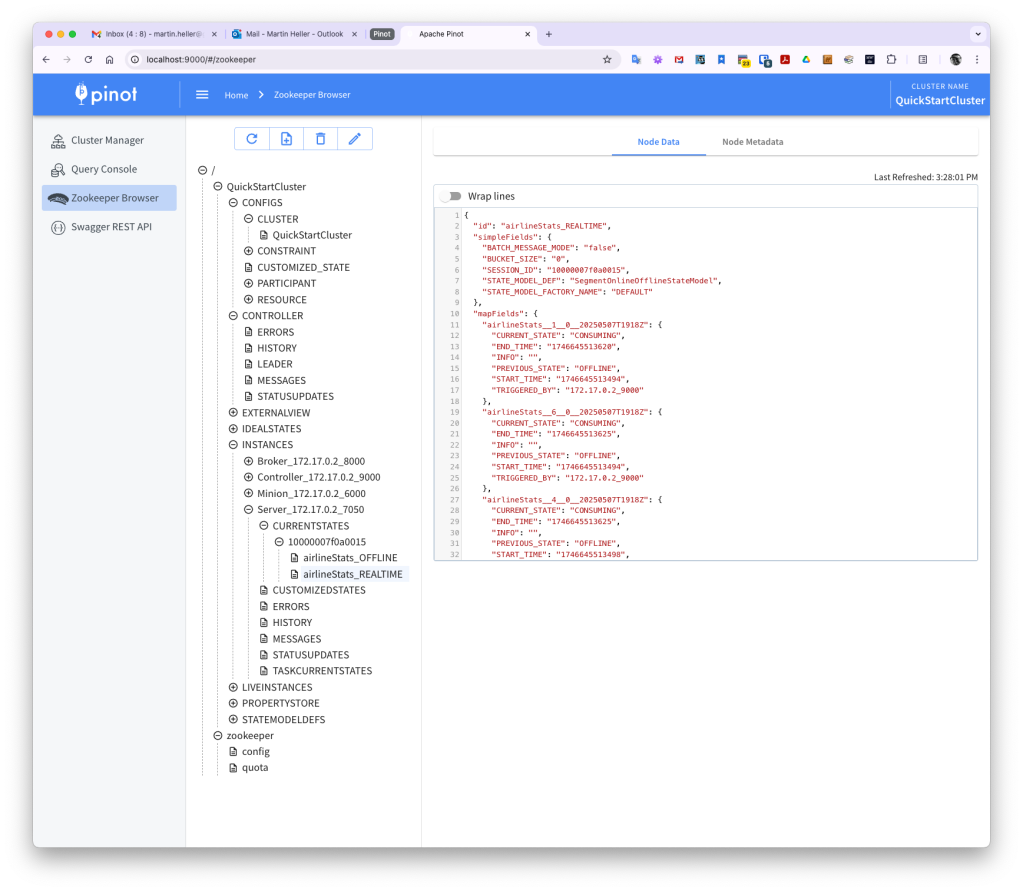

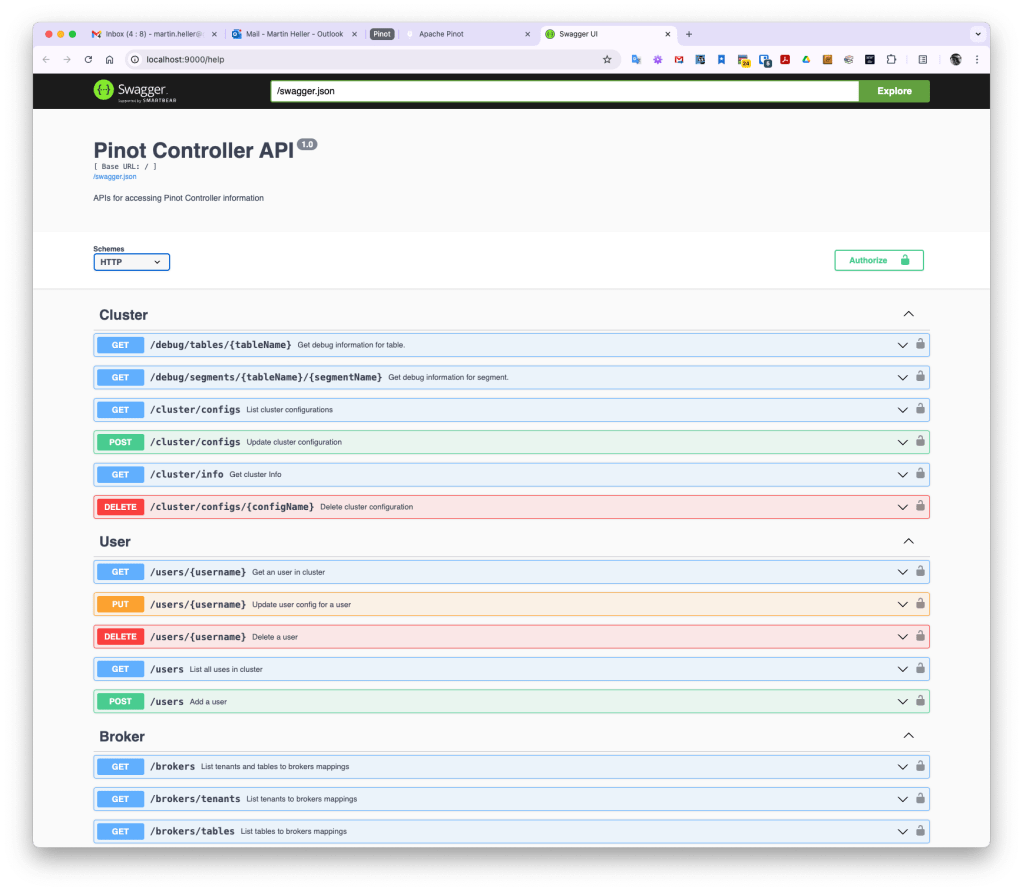

I ran the Apache Pinot hybrid quick start example, which demonstrates hybrid stream and batch processing using airline flight data, using Docker on my M4 Pro MacBook Pro. I allocated 6 CPUs (out of 12), 4 GB of RAM (out of 24), 1 GB of swap space, and a maximum of 60 GB of disk space to Docker.

Once I found the correct command (I was initially looking in the wrong place), it was fairly painless. I could also have run the Apache Pinot demo using Brew or launcher scripts.

The hybrid quick start example starts Apache Kafka, Apache ZooKeeper, Pinot controller, Pinot broker, and Pinot server, then creates an airlineStats schema, an airlineStats offline table, and an airlineStats real-time table that ingests messages from a Kafka topic. The data set had about 10K rows and almost 80 columns. The schema doesn’t seem to create any indexes. The screen shots below show some of the web pages.

Additional tutorials in the Apache Pinot getting started documentation show you how to create clusters, configure tables, ingest batch and streaming data, and configure indexes. You may also want to scan at least the titles of the Apache Pinot recipes, and try the Pizza Shop Demo, an analytic dashboard app.

IDG

IDG

IDG

IDG

Good, fast analytics

Overall, Apache Pinot and StarTree Cloud offer good, fast analytics capabilities, enabling real-time analytics for customers as well as internal users. Apache Pinot’s major distinguishing features over other columnar analytic databases are its plug-in indexes, star-tree index, and ability to combine batch data with real-time data.

Apache Pinot competitors include StarRocks, Rockset (acquired by OpenAI), Apache Druid, ClickHouse, and Snowflake, but they’re not all the same. Druid is focused on time-series data. ClickHouse is essentially a data warehouse. And Snowflake is a cloud-based data warehouse that is typically used for batch analytics, though it can also handle some real-time workloads.

Cost

Apache Pinot: Free open source. StarTree Cloud: Free, 100 GB storage; Standard, $999/month 1TB+ storage; Premium, $3,999/month 3TB+ storage. Own cloud (BYOC): Call sales. See https://startree.ai/pricing for details.

Platform

Java 11 or Java 21; AWS, Google Cloud Platform, and Microsoft Azure; Docker.

Bottom line

Apache Pinot and StarTree Cloud offer good, fast analytics capabilities, enabling real-time analytics for customers as well as internal users. Apache Pinot’s major distinguishing features over other columnar analytic databases are its plug-in indexes, star-tree index, and ability to combine batch data with real-time data.

Pros

- Open-source, distributed database for customer-facing real-time analytics

- Can scale to thousands of nodes

- Can respond with low (< 100ms) latency without caching

- Can process hundreds of thousands of simultaneous queries

- Supports many kinds of indexes, including its signature tunable star-tree index

Cons

- Many Java processes involved, coordinated by ZooKeeper

- SQL support is limited to DML; DDL is handled using JSON

If you found this article helpful, please support our YouTube channel Life Stories For You